BCM theory

BCM theory, BCM synaptic modification, or the BCM rule, named for Elie Bienenstock, Leon Cooper, and Paul Munro, is a physical theory of learning in the visual cortex developed in 1981. Due to its successful experimental predictions, the theory is arguably the most accurate model of synaptic plasticity to date.

"The BCM model proposes a sliding threshold for Long-term potentiation or Long-term depression induction and states that synaptic plasticity is stabilized by a dynamic adaptation of the time-averaged postsynaptic activity. According to the BCM model, reducing the postsynaptic activity decreases the LTP threshold and increases the LTD threshold. The opposite applies to the increase in postsynaptic activity."[1]


Development

In 1949, Donald Hebb proposed a working mechanism for memory and computational adaptation in the brain, now called Hebbian learning and summarized by the maxim that cells that fire together, wire together. This law underlies the view of the brain as a neural network, in principle capable of Turing-complete computation, and thus became a standard materialist model for the mind.

However, Hebb's rule has two problems: it has no mechanism for connections to weaken, and it places no upper bound on how strong they can become. In other words, the model is unstable, both theoretically and computationally. Later modifications gradually improved Hebb's rule, normalizing it and allowing for the decay of synapses, so that no activity or unsynchronized activity between neurons results in a loss of connection strength. New biological evidence brought this work to a peak in the 1970s, when theorists formalized various approximations in the theory, such as the use of firing frequency instead of potential in determining neuron excitation, and the assumption of ideal and, more importantly, linear synaptic integration of signals. That is, there is no unexpected behavior in the adding of input currents to determine whether or not a cell will fire.
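The instability of the unmodified rule can be illustrated with a short Python sketch. It assumes the simplest linear form of Hebb's rule, $\Delta m_j = \eta\, c\, d_j$ with $c = \mathbf{m} \cdot \mathbf{d}$. The function name `hebbian_norms`, the learning rate, and the Gaussian random inputs are hypothetical choices made for illustration, not taken from the cited literature.

```python
import math
import random


def hebbian_norms(steps=200, lr=0.01, n=5, seed=0):
    """Apply plain Hebbian updates and record the weight norm after each step.

    Assumed update (illustrative): m_j <- m_j + lr * c * d_j, with c = m . d.
    There is no decay term and no threshold, so nothing can weaken a synapse.
    """
    rng = random.Random(seed)
    m = [rng.gauss(0.0, 0.1) for _ in range(n)]        # arbitrary initial weights
    norms = [math.sqrt(sum(w * w for w in m))]
    for _ in range(steps):
        d = [rng.gauss(0.0, 1.0) for _ in range(n)]    # hypothetical input pattern
        c = sum(w * x for w, x in zip(m, d))           # linear postsynaptic response
        m = [w + lr * c * x for w, x in zip(m, d)]     # Hebbian strengthening
        norms.append(math.sqrt(sum(w * w for w in m)))
    return norms


norms = hebbian_norms()
print(f"initial norm {norms[0]:.4f}, final norm {norms[-1]:.4f}")
```

Each update adds $2\eta c^2 + \eta^2 c^2 |\mathbf{d}|^2 \ge 0$ to the squared weight norm, so under this rule the norm can only grow or stay constant, whatever inputs are presented.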

These approximations resulted in the basic form of BCM below in 1979, but the final step came in the form of mathematical analysis to prove stability and computational analysis to prove applicability, culminating in Bienenstock, Cooper, and Munro's 1982 paper.

Since then, experiments have shown evidence for BCM behavior in both the visual cortex and the hippocampus, the latter of which plays an important role in the formation and storage of memories. Both of these areas are well-studied experimentally, but both theory and experiment have yet to establish conclusive synaptic behavior in other areas of the brain. Furthermore, a biological mechanism for synaptic plasticity in BCM has yet to be established.[2]

Theory

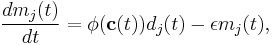

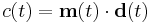

The basic BCM rule takes the form

$$\frac{d m_j(t)}{dt} = \phi(c(t))\, d_j(t) - \epsilon\, m_j(t)$$

where $m_j$ is the synaptic weight of the $j$th synapse, $d_j$ is that synapse's input current, $c$ is the postsynaptic output (the weighted sum of the presynaptic inputs), $\phi$ is the postsynaptic activation function that changes sign at some output threshold $\theta_M$, and $\epsilon$ is the (often negligible) decay constant for the uniform decay of all synapses.

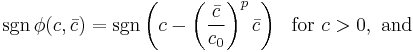

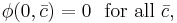

This model is a modified form of the Hebbian learning rule, $\dot{m}_j = c\, d_j$, and requires a suitable choice of activation function, or rather of the output threshold, to avoid the Hebbian problems of instability. This threshold was derived rigorously in BCM by noting that, with $c(t) = \mathbf{m}(t) \cdot \mathbf{d}(t)$ and the approximation of the average output $\bar{c}(t) \approx \mathbf{m}(t) \cdot \bar{\mathbf{d}}$, for one to have stable learning it is sufficient that

$$\operatorname{sgn} \phi(c, \bar{c}) = \operatorname{sgn}\left(c - \left(\frac{\bar{c}}{c_0}\right)^{p} \bar{c}\right) \ \text{for } c > 0, \quad \text{and} \quad \phi(0, \bar{c}) = 0 \ \text{for all } \bar{c},$$

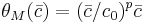

or equivalently, that the threshold $\theta_M(\bar{c}) = (\bar{c}/c_0)^{p}\, \bar{c}$, where $p$ and $c_0$ are fixed positive constants.[3]
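As a rough illustration of how such a threshold slides, the sketch below assumes the threshold form $\theta_M(\bar{c}) = (\bar{c}/c_0)^p\, \bar{c}$ and reports only the sign that $\phi$ must take for a non-negative response $c$. The function names and the default values $c_0 = 1$, $p = 2$ are hypothetical, chosen so the arithmetic is easy to follow.

```python
def sliding_threshold(c_bar, c0=1.0, p=2.0):
    """Assumed threshold theta_M(c_bar) = (c_bar / c0)**p * c_bar, for c_bar >= 0."""
    return (c_bar / c0) ** p * c_bar


def phi_sign(c, c_bar, c0=1.0, p=2.0):
    """Sign required of phi(c, c_bar) for a response c >= 0.

    +1 means potentiation (c above threshold), -1 means depression,
    and 0 is returned when c is zero or sits exactly on the threshold.
    """
    if c == 0:
        return 0
    diff = c - sliding_threshold(c_bar, c0, p)
    return (diff > 0) - (diff < 0)


# Same response c = 1.0, different recent average activity:
print(sliding_threshold(0.5), phi_sign(1.0, 0.5))   # low average: threshold 0.125, sign +1
print(sliding_threshold(2.0), phi_sign(1.0, 2.0))   # high average: threshold 8.0, sign -1
```

With these assumed constants, the same response is potentiated when the neuron has recently been quiet and depressed when it has recently been very active, which is the sliding behavior described in the opening quotation.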

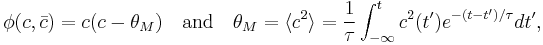

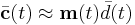

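When implemented, the theory is often taken such that

$$\phi(c, \bar{c}) = c\,(c - \theta_M) \quad \text{and} \quad \theta_M = \langle c^2 \rangle = \frac{1}{\tau} \int_{-\infty}^{t} c^2(t')\, e^{-(t - t')/\tau}\, dt',$$

where angle brackets are a time average and $\tau$ is the time constant of selectivity.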
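A discrete-time version of this implementation can be sketched in Python. The code assumes $\phi(c, \theta_M) = c\,(c - \theta_M)$, a forward-Euler step for the weights, and the recursive update $\theta_M \leftarrow \theta_M + (c^2 - \theta_M)/\tau$ as a stand-in for the exponentially weighted time average of $c^2$. The names `bcm_step` and `simulate`, the step size `eta`, the two orthogonal input patterns, and the initial weights are all hypothetical choices, not part of the original theory.

```python
import random


def bcm_step(m, d, theta, eta=0.01, tau=20.0, eps=0.0):
    """One Euler step of the BCM rule for a single neuron.

    m     : list of synaptic weights m_j
    d     : list of input currents d_j
    theta : current modification threshold theta_M
    Returns (new_weights, new_threshold, response c).
    """
    c = sum(w * x for w, x in zip(m, d))              # c = m . d
    phi = c * (c - theta)                             # sign flips at c = theta
    new_m = [w + eta * (phi * x - eps * w) for w, x in zip(m, d)]
    new_theta = theta + (c * c - theta) / tau         # running average of c^2
    return new_m, new_theta, c


def simulate(steps=5000, seed=0):
    """Present two hypothetical input patterns at random and train the weights."""
    rng = random.Random(seed)
    patterns = [[1.0, 0.0], [0.0, 1.0]]
    m, theta = [0.6, 0.4], 0.0
    for _ in range(steps):
        m, theta, _ = bcm_step(m, rng.choice(patterns), theta)
    return m, theta


weights, threshold = simulate()
print("weights:", weights, "threshold:", threshold)
```

In this sketch the threshold needs to adapt faster than the weights for the learning to settle, which is why `eta` is kept small relative to `1 / tau`. Plotting the response to each pattern over time is a simple way to check whether the neuron becomes selective under a given choice of parameters.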

The model has drawbacks: it requires both long-term potentiation and long-term depression, that is, increases and decreases in synaptic strength, which have not been observed in all cortical systems. Further, it requires a variable activation threshold and depends strongly on the stability of the selected fixed points $c_0$ and $p$. However, the model's strength is that it incorporates all these requirements from independently derived rules of stability, such as normalizability and a decay function with time proportional to the square of the output.[4]

Experiment

The first major experimental confirmation of BCM came in 1992 in investigating LTP and LTD in the hippocampus. The data showed qualitative agreement with the final form of the BCM activation function.[5] This experiment was later replicated in the visual cortex, which BCM was originally designed to model.[6] This work provided further evidence of the necessity for a variable threshold function for stability in Hebbian-type learning (BCM or others).

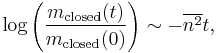

Experimental evidence had been non-specific to BCM until Rittenhouse et al. confirmed BCM's prediction of synapse modification in the visual cortex when one eye is selectively closed. Specifically,

$$\log\left(\frac{m_{\text{closed}}(t)}{m_{\text{closed}}(0)}\right) \sim -\overline{n^2}\, t,$$

where $\overline{n^2}$ describes the variance in spontaneous activity or noise in the closed eye and $t$ is time since closure. Experiment agreed with the general shape of this prediction and provided an explanation for the dynamics of monocular eye closure (monocular deprivation) versus binocular eye closure.[7] The experimental results are far from conclusive, but so far have favored BCM over competing theories of plasticity.
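Read as an exponential decay, a prediction of this kind can be written as a one-line function. The sketch below assumes the relation $\log\left(m_{\text{closed}}(t)/m_{\text{closed}}(0)\right) \sim -\overline{n^2}\, t$ and introduces a proportionality constant `k`, which is hypothetical and would have to be fitted to data. The function name is likewise an illustrative choice.

```python
import math


def closed_eye_weight(m0, noise_var, t, k=1.0):
    """Closed-eye synaptic weight after time t under an assumed exponential decay.

    m0        : weight at the moment of closure, m_closed(0)
    noise_var : variance of spontaneous activity in the closed eye
    t         : time since closure
    k         : hypothetical proportionality constant (not given in the text)
    """
    return m0 * math.exp(-k * noise_var * t)


# More noise in the closed eye gives a faster loss of synaptic strength.
for noise_var in (0.0, 0.5, 1.0):
    print(noise_var, closed_eye_weight(1.0, noise_var, t=2.0))
```

Under this reading, a closed eye with no spontaneous activity would keep its synaptic strength, while a noisier closed eye would lose it more quickly.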

Applications

While the algorithm of BCM is too complicated for large-scale parallel distributed processing, it has been put to use in lateral networks with some success.[8] Furthermore, some existing computational network learning algorithms have been made to correspond to BCM learning.[9]

References

- Bologna, M., Agostino, R., Gregori, B., Belvisi, D., Manfredi, M., & Berardelli, A. (2010). Metaplasticity of the human trigeminal blink reflex. European Journal of Neuroscience, 32(10), 1707-1714. doi:10.1111/j.1460-9568.2010.07446.x.

- Cooper, L.N. (2000). "Memories and memory: A physicist's approach to the brain". International Journal of Modern Physics A 15 (26): 4069–4082. http://physics.brown.edu/physics/researchpages/Ibns/Lab%20Publications%20(PDF)/memoriesandmemory.pdf. Retrieved 2007-11-11.

- Bienenstock, Elie L.; Leon Cooper, Paul Munro (January 1982). "Theory for the development of neuron selectivity: orientation specificity and binocular interaction in visual cortex". The Journal of Neuroscience 2 (1): 32–48. PMID 7054394. http://www.physics.brown.edu/physics/researchpages/Ibns/Cooper%20Pubs/070_TheoryDevelopment_82.pdf. Retrieved 2007-11-11.

- Intrator, Nathan (2006-2007). "The BCM theory of synaptic plasticity". Neural Computation. School of Computer Science, Tel-Aviv University. http://www.cs.tau.ac.il/~nin/Courses/NC05/BCM.ppt. Retrieved 2007-11-11.

- Dudek, Serena M.; Mark Bear (1992). "Homosynaptic long-term depression in area CA1 of hippocampus and effects of N-methyl-D-aspartate receptor blockade". Proc. Natl. Acad. Sci. 89 (10): 4363–4367. doi:10.1073/pnas.89.10.4363. PMC 49082. PMID 1350090. http://www.pnas.org/cgi/reprint/89/10/4363.pdf. Retrieved 2007-11-11.

- Kirkwood, Alfredo; Marc G. Rioult, Mark F. Bear (1996). "Experience-dependent modification of synaptic plasticity in rat visual cortex". Nature 381 (6582): 526–528. doi:10.1038/381526a0. PMID 8632826. http://www.nature.com/nature/journal/v381/n6582/abs/381526a0.html. Retrieved 2007-11-11.

- Rittenhouse, Cynthia D.; Harel Z. Shouval, Michael A. Paradiso, Mark F. Bear (1999). "Monocular deprivation induces homosynaptic long-term depression in visual cortex". Nature 397 (6717): 347–50. doi:10.1038/16922. PMID 9950426. http://www.nature.com/nature/journal/v397/n6717/abs/397347a0.html. Retrieved 2007-11-11.

- Intrator, Nathan (2006-2007). "BCM Learning Rule, Comp Issues". Neural Computation. School of Computer Science, Tel-Aviv University. http://www.cs.tau.ac.il/~nin/Courses/NC05/bcmppr.pdf. Retrieved 2007-11-11.

- Baras, Dorit; Ron Meir (2007). "Reinforcement Learning, Spike-Time-Dependent Plasticity, and the BCM Rule". Neural Computation 19 (8): 2245–2279. doi:10.1162/neco.2007.19.8.2245. PMID 17571943. http://eprints.pascal-network.org/archive/00002561/01/RL-STDP_Final.pdf. Retrieved 2007-11-11.